Now that you’ve outlined your respondents, pathing, and screener, you can start authoring your main survey questions. Like the deals they support, due diligence surveys are each unique to your client’s needs. However, certain question types and design best practices have proven to yield useful, higher-quality data across a variety of project types.

Note: this is the second chapter in a series: "The Consultant's Guide to Diligence Surveys."

Common Question Types

Below, we’ve outlined a number of common question types that you may wish to consider during the survey authoring process:

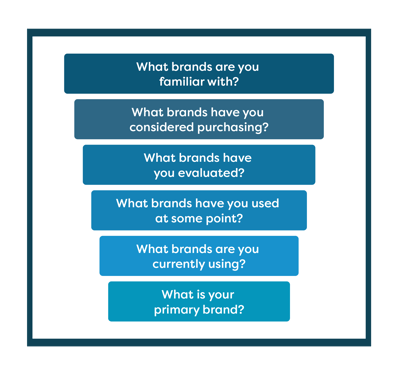

Brand Awareness

Oftentimes, your survey will aim to understand respondent familiarity with specific brands or products. A brand awareness funnel can fulfill this objective through a series of multi-select questions that map to the funnel stages. Make sure you customize the stages based on your model movers and deal killers!
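To make the funnel concrete, here is a minimal Python sketch of how multi-select funnel responses might be tallied. It assumes each respondent's answers are stored as a set of brands per stage and that pathing only shows a later-stage question for brands selected at the previous stage. The stage names in `FUNNEL_STAGES` and the function names are hypothetical placeholders for your own customized stages and tooling.

```python
from collections import Counter

# Hypothetical funnel stages; replace with the stages you customize for your deal.
FUNNEL_STAGES = ["aware", "considered", "purchased", "current_user"]


def funnel_counts(responses, brands):
    """Count respondents who selected each brand at each funnel stage.

    responses: list of dicts mapping a stage name to the set of brands the
    respondent selected in that stage's multi-select question.
    """
    counts = {brand: Counter() for brand in brands}
    for response in responses:
        for stage in FUNNEL_STAGES:
            for brand in response.get(stage, set()):
                if brand in counts:  # ignore brands outside the analysis set
                    counts[brand][stage] += 1
    return counts


def conversion_rates(stage_counts):
    """Ratio of next-stage to current-stage counts for one brand.

    Assumes pathing makes each stage a subset of the previous one, so the
    ratio reads as "share of respondents who moved to the next stage".
    """
    rates = {}
    for prev, curr in zip(FUNNEL_STAGES, FUNNEL_STAGES[1:]):
        base = stage_counts[prev]
        rates[f"{prev}->{curr}"] = stage_counts[curr] / base if base else 0.0
    return rates
```

Stage-to-stage rates make it easier to see where a brand loses respondents, for example high awareness but low consideration.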

Net Promoter Score (NPS)

NPS is commonly used by our clients to reveal respondent sentiment toward different brands. Ask NPS questions early in the survey so that earlier questions don’t bias the results. You should typically only ask NPS of current users of the brands/products you’re diligencing; if you need to include lapsed users in your sample, make sure you reweight NPS before comparing scores across brands!
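For reference, NPS is calculated from a 0 to 10 "how likely are you to recommend" question as the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (scores of 0 to 6). The Python sketch below computes it, optionally with per-respondent weights. The `segment_weights` helper is a hypothetical illustration of one simple reweighting approach: it scales each respondent so that the current/lapsed mix matches a target mix you choose, and that target is an assumption you would need to justify for your project.

```python
from collections import Counter


def nps(scores, weights=None):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6).

    scores: 0-10 answers to a "how likely are you to recommend" question.
    weights: optional per-respondent weights (defaults to 1 each).
    """
    if weights is None:
        weights = [1.0] * len(scores)
    total = sum(weights)
    if total == 0:
        raise ValueError("no responses to score")
    promoters = sum(w for s, w in zip(scores, weights) if s >= 9)
    detractors = sum(w for s, w in zip(scores, weights) if s <= 6)
    return 100.0 * (promoters - detractors) / total


def segment_weights(segments, target_mix):
    """Hypothetical reweighting: scale respondents so segment shares match target_mix.

    segments: per-respondent labels such as "current" or "lapsed".
    target_mix: assumed target share for each label, summing to 1.
    """
    counts = Counter(segments)
    n = len(segments)
    return [target_mix[seg] / (counts[seg] / n) for seg in segments]
```

With an even current/lapsed split, `nps([10, 10, 2, 2])` is 0; reweighting to a 75/25 mix with `segment_weights(["current", "current", "lapsed", "lapsed"], {"current": 0.75, "lapsed": 0.25})` raises it to 50, which shows how much the sample mix alone can move the score.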

Key Performance Criteria (KPC)

Key performance criteria (also called key purchasing criteria) are the most important factors respondents consider when purchasing a product or service.

There are two common objectives to address in surveys:

- Understand the most important criteria for respondents’ evaluation and decision-making.

- Determine different vendors’ performance on said criteria.
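One way to connect these two objectives during analysis is sketched below in Python, assuming respondents rate each criterion's importance and each vendor's performance on a 1 to 5 scale. The importance-weighted vendor score is an illustrative summary rather than a standard KPC metric, and the data layout and function names are hypothetical.

```python
from statistics import mean


def rank_criteria(importance):
    """Return criteria sorted by mean importance rating, highest first.

    importance: dict mapping criterion -> list of 1-5 importance ratings.
    """
    return sorted(importance, key=lambda c: mean(importance[c]), reverse=True)


def weighted_vendor_score(vendor, importance, performance):
    """Importance-weighted average of a vendor's mean performance ratings.

    performance: dict mapping (vendor, criterion) -> list of 1-5 ratings.
    """
    weights = {c: mean(ratings) for c, ratings in importance.items()}
    total_weight = sum(weights.values())
    weighted = sum(w * mean(performance[(vendor, c)]) for c, w in weights.items())
    return weighted / total_weight
```

Weighting by importance means a vendor that scores well on the criteria respondents care most about ranks higher than one that excels only on minor criteria.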

Switching

Switching questions are useful for determining the stickiness of brands and products. These questions might ask respondents about lapsed use of a brand, reasons for switching from one brand or vendor to another, or the frequency or format of their RFPs (requests for proposal).

Pricing

Diligence surveys frequently include a pricing component, like pricing trends or cross-product/segment comparisons. In most cases, answer choices for these questions will involve ranges rather than specific percentages or dollar amounts to adhere to compliance requirements.
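Because range answers don't give exact amounts, one common approximation when summarizing them is to treat each range as its midpoint. The Python sketch below assumes ranges are stored as `(low, high)` pairs and that you supply an assumed representative value for any open-ended top range (e.g. "$100k+"). Both choices are assumptions that can noticeably move the estimate, so state them alongside any result.

```python
def estimate_mean_spend(range_counts, open_top_value=None):
    """Approximate mean spend from range answers using range midpoints.

    range_counts: dict mapping (low, high) -> respondent count; use
    high=None for an open-ended top range such as "$100k+".
    open_top_value: assumed representative value for the open-ended range.
    """
    total_n = 0
    total_spend = 0.0
    for (low, high), n in range_counts.items():
        if high is None:
            if open_top_value is None:
                raise ValueError("open-ended range needs an assumed value")
            value = open_top_value
        else:
            value = (low + high) / 2  # midpoint of the closed range
        total_n += n
        total_spend += value * n
    if total_n == 0:
        raise ValueError("no responses")
    return total_spend / total_n
```

For example, two respondents in a 0 to 10 range and two in a 10 to 20 range give an estimated mean of 10.0 (in whatever units the ranges use).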

Types of pricing questions include:

- Spending / share of wallet to determine spend on a particular product or brand.

- Share of budget to determine what percentage of budget a department or individual spends, or is thinking of spending, on a particular product or brand.

- Spend vs. KPC to determine optimal service or product features for a specific price point.

Structuring questions that capture this information reliably is difficult, so we strongly recommend reaching out to your survey provider for assistance!

Managing User Fatigue

As you author your survey, you also need to think about how to ensure users will complete the survey. Respondents have a limited attention span for answering survey questions, so it’s critical you design your survey with user fatigue in mind.

One key risk is users abandoning the survey, which slows data collection and may affect your overall project timeline. Even worse, some respondents may provide random answers to finish the survey more quickly. This issue is difficult to detect and can undermine the validity of the data and analysis you deliver. You also risk wasting valuable responses that may be difficult to replace.

Whether a survey is likely to tire out respondents is ultimately a judgment call for you and your product team. The optimal number of questions varies with the type of information needed, the survey topic, and the complexity and repetitiveness of the questions. In general, longer questions (such as combination questions or matrices) tend to have higher abandonment rates.

Being deliberate about click volume improves response accuracy and limits abandonment. When designing your survey, think of minimizing user fatigue as limiting the number of clicks per question, i.e. the number of times a respondent must interact with the survey, such as selecting a check box or typing in a number.

Some best practices for limiting click volume include:

- Use loops instead of matrices.

- Avoid complicated combination questions.

- When you don’t need exact values, use ranges: for age, for example, offer multiple-choice options like 18-25, 26-35, etc., instead of asking the respondent to type in a number.

- Use drop-down menus sparingly. They require twice as many clicks as a multiple-choice question: one to open the menu and one to select the answer.

- Avoid free text fields as much as possible. Not only do they fatigue users, they also make data more difficult to analyze.
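To audit a draft survey against these practices, you could tally estimated clicks per question. In the Python sketch below, the two-click cost for drop-downs follows the guidance above; the other per-type costs, the `Question` fields, and the five-click threshold are illustrative assumptions to adjust for your own survey platform.

```python
from dataclasses import dataclass


@dataclass
class Question:
    name: str
    kind: str  # "single_choice", "dropdown", "multi_select", "matrix", "numeric_entry"
    rows: int = 1  # matrix rows (one answer per row)
    expected_selections: int = 1  # assumed average picks for a multi-select


def estimated_clicks(q: Question) -> int:
    """Rough interaction count for one question (assumed costs per type)."""
    if q.kind == "single_choice":
        return 1
    if q.kind == "dropdown":
        return 2  # one click to open, one to select
    if q.kind == "multi_select":
        return q.expected_selections
    if q.kind == "matrix":
        return q.rows
    if q.kind == "numeric_entry":
        return 1  # counted as a single "type in a number" interaction
    raise ValueError(f"unknown question kind: {q.kind}")


def click_report(questions, max_clicks_per_question=5):
    """Return total estimated clicks and names of questions over the threshold."""
    total = sum(estimated_clicks(q) for q in questions)
    heavy = [q.name for q in questions if estimated_clicks(q) > max_clicks_per_question]
    return total, heavy
```

A question flagged here, such as a large matrix, is a candidate for restructuring or trimming answer options.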

A final key to limiting respondent fatigue is to optimize questions for both desktop and mobile, since most respondents take surveys on a mobile device. The easiest way to do this is to keep questions short so that respondents don’t have to scroll. In matrix questions, for example, use fewer than five columns and limit the number of answer options.

In the third and final chapter, we'll cover working with panel providers and how to test your survey effectively for optimized results.